Top News

Tools

Machine learning is the driving force behind innovative applications in the cloud. The capabilities of machine learning models allow developers to create cutting-edge technologies that enable businesses to make better decisions, improve customer experiences, and automate processes. The scalability of cloud computing makes it an ideal platform for machine learning applications. The combination of machine learning and the cloud is already transforming industries and shows no signs of slowing down anytime soon.

- Cloud Computing for Financial Services (Monday, 30 October 2023)

- Mobile App Development in the Cloud: Opportunities and Innovation (Monday, 09 October 2023)

- Cloud Computing for Healthcare Industry (Monday, 25 September 2023)

- Cloud Computing and Virtualization: The Powerhouse of Modern Technology (Monday, 11 September 2023)

Storage

Cloud computing has significantly changed how we live and work, above all how we do business. A decade ago it was little more than a buzzword in IT; today it has made its way into many industries, bringing substantial change wherever it has been adopted. Back then, little was known about this emerging technology, so people asked what it was and what changes it was likely to bring. Many of those questions have been answered, but new ones keep emerging. Here are some key cloud computing stats that might help.

- AWS Releases The Open Source Library AutoGluon For AI Development (Monday, 10 February 2020)

- Containers Are An Important Tool For Developers Deploying To The Cloud, But Look Out For These Vulnerabilities (Monday, 16 September 2019)

- How the Cloud Leverages Open-Source Solutions (Wednesday, 11 September 2019)

- Managing Your Cloud Computing Costs (Wednesday, 13 March 2019)

Serverless Computing Takes Off

Serverless computing has emerged as one of the hottest tech trends in recent years, offering businesses and developers a new way to build and deploy applications without having to worry about servers or infrastructure. As more companies shift their workloads to the cloud, they are increasingly adopting serverless architectures to achieve greater flexibility, scalability, and cost efficiencies.

The Rise of Serverless

The serverless computing market remains on a steep growth trajectory as more companies find compelling use cases for the technology. According to MarketsandMarkets, the global serverless architecture market is projected to grow from $7.6 billion in 2020 to $36 billion by 2026, at a compound annual growth rate of almost 32%. Serverless allows teams to focus on writing code and building applications without spending time and resources on infrastructure or operations. The managed nature of serverless also makes it simpler for companies to experiment, deploy changes quickly, and scale rapidly up or down based on real-time demand.
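As a quick sanity check on projections like this one, the compound annual growth rate can be recomputed from the two endpoints. The sketch below assumes six full annual periods between 2020 and 2026, which is an assumption and not something stated above; under that assumption the implied rate comes out a little under 30%, so the quoted "almost 32%" presumably rests on a different base period or on rounding.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` annual periods."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # Market sizes quoted in the text, in billions of US dollars.
    # The six-period count (2026 - 2020) is an assumption.
    rate = cagr(7.6, 36.0, years=2026 - 2020)
    print(f"Implied CAGR: {rate:.1%}")
```

The same function works for any pair of start and end figures, which makes it easy to cross-check growth claims before relying on them.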

Key Benefits Driving Adoption

There are several key benefits leading businesses to deploy serverless architectures:

Cost Savings: With serverless computing, companies pay only for the resources actually used to execute a function when it is invoked. They can see significant savings by not having to overprovision capacity upfront to handle peak loads. Some estimates put the savings at 70-90% compared to running the same workloads on traditional servers, although the actual figure depends heavily on the workload and traffic pattern.

Agility and Speed: Serverless enables faster development cycles and deployments, since developers don’t have to make requests to IT or wait for infrastructure modifications. New functions can be written and deployed in seconds. This leads to greater business agility.

Scalability: Serverless applications can automatically scale up or down based on usage, allowing them to easily handle sudden spikes in traffic or events like Black Friday without capacity planning. The service provider handles scaling seamlessly.

Operational Efficiency: Serverless offloads responsibilities like capacity provisioning, patching, scaling and more to the cloud provider, freeing up internal IT resources and allowing companies to focus on innovation rather than maintenance.
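The pay-per-use idea behind the cost argument can be sketched with a little arithmetic. The example below is only an illustration: the function names, the pricing structure (a charge per GB-second plus a charge per million requests), and every rate in it are hypothetical placeholders, not any provider's actual prices.

```python
def pay_per_use_cost(invocations: int, avg_seconds: float, memory_gb: float,
                     price_per_gb_second: float,
                     price_per_million_requests: float) -> float:
    """Monthly cost when billed only for execution time and request count."""
    compute = invocations * avg_seconds * memory_gb * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests


def provisioned_cost(servers: int, hourly_rate: float, hours: float = 730.0) -> float:
    """Monthly cost of servers that run (and bill) whether busy or idle."""
    return servers * hourly_rate * hours


if __name__ == "__main__":
    # All figures below are made-up inputs for illustration only.
    serverless = pay_per_use_cost(2_000_000, 0.2, 0.5, 0.00002, 0.20)
    servers = provisioned_cost(servers=2, hourly_rate=0.05)
    print(f"pay-per-use: ${serverless:.2f}  provisioned: ${servers:.2f}")
```

The comparison is sensitive to its inputs: with spiky or low traffic the pay-per-use figure tends to win, while a workload that keeps servers busy around the clock can make provisioned capacity the cheaper option.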

Use Cases Emerging Across Industries

Companies across industries and of all sizes have found success using serverless for a growing list of use cases:

• Data processing & analytics

• Web & mobile applications

• IoT backends

• Chatbots

• Event-driven workflows

• API development

Leading cloud providers like AWS, Microsoft Azure, and Google Cloud offer serverless platforms companies can build on. Industries leading serverless adoption include financial services, healthcare, retail, media & entertainment, manufacturing and more.
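To make the model concrete, here is a minimal sketch of what a serverless function can look like. It assumes the `handler(event, context)` signature used by AWS Lambda's Python runtime; the `name` field in the event and the shape of the response are hypothetical choices made for illustration.

```python
import json


def handler(event, context):
    """Entry point the platform would call once per incoming event."""
    # "name" is a hypothetical field; real events depend on the trigger.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test: the platform normally supplies event and context.
    print(handler({"name": "cloud"}, None))
```

There is no server setup, port binding, or process management in the code itself; in a serverless deployment those concerns sit with the platform, which is what lets the use cases above be built function by function.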

The Future of Serverless

As developers gain confidence in serverless and cloud providers expand their tooling and capabilities, adoption is expected to increase rapidly. Gartner predicts that by 2025, more than 75% of mid-size to large companies will have adopted some form of serverless computing. This shift allows companies to accelerate innovation, reduce costs, and future-proof their architectures. While still evolving, serverless is proving to be an enterprise-grade solution able to power mission-critical workloads while unlocking agility, scalability and efficiency advantages. The promise and business benefits of serverless suggest it will continue its upward growth trajectory as a core cloud service model well into the future.

Cloud Computing for Remote Workforces

Cloud computing has revolutionized the way we work, enabling remote workforces to thrive. With the increasing demand for flexibility and agility in the workplace, businesses have turned to cloud applications to take advantage of the numerous benefits they offer.

Before remote work became a popular trend, cloud-based networks were already being used by digitally mature organizations. These networks allowed individuals to work independently, thanks to technologies such as email, messaging tools, mobile applications, progressive web apps (PWAs), and Voice over IP (VoIP) telephony. The adaptability and agility of cloud-based technology made it easier for businesses to embrace remote work, leading to increased productivity and efficiency.

One of the primary benefits of cloud computing for remote work is the convenience and accessibility it provides. With cloud applications, employees can access their work from anywhere, at any time, using any device with an internet connection. This flexibility allows them to work remotely without any limitations, which is especially beneficial for individuals who prefer a work-from-home setup or those who need to travel frequently for work.

Another advantage of cloud computing for remote workforces is the improved collaboration capabilities it offers. With cloud-based tools and platforms, teams can easily collaborate, share files, and work on projects together in real-time. This eliminates the need for physical meetings and enables seamless communication and collaboration, regardless of the employees' physical locations. This level of collaboration is essential in remote work scenarios, as it promotes teamwork and ensures that all team members are on the same page.

Security is another crucial aspect that businesses consider when implementing cloud computing for remote workforces. In the past, there were concerns about the security of cloud applications, especially when handling sensitive data. However, as technology has advanced, cloud providers have developed robust security measures to protect data and prevent unauthorized access. These security measures include encryption, multi-factor authentication, regular backups, and strict access controls. Implementing these security measures ensures that remote workers can confidently work on cloud-based platforms without compromising the security of their data or the organization's sensitive information.

Cost-effectiveness is yet another benefit of cloud computing for remote workforces. With cloud applications, businesses can save on infrastructure costs, as they no longer need to maintain on-premises servers or invest in expensive hardware and software. Cloud service providers handle the infrastructure requirements, and businesses only pay for the resources they consume, making it a more cost-effective solution for remote workforces.

Furthermore, cloud computing allows for scalability and flexibility. As a business's needs change and grow, cloud resources can be easily scaled up or down to accommodate the changing requirements. This scalability is particularly beneficial for remote workforces, as it allows companies to quickly adapt to fluctuations in workload and accommodate the growing needs of remote employees.

In conclusion, cloud computing has become an essential tool for remote workforces. The flexibility, accessibility, improved collaboration capabilities, security, cost-effectiveness, and scalability it offers make it an ideal solution for businesses embracing remote work. As we continue to witness the changes in the workplace, it is safe to say that cloud computing will play a significant role in meeting the needs of remote workforces and ensuring their success. [1][2]

Cloud Computing and Infrastructure as a Service (IaaS)

Cloud computing has transformed the way businesses and individuals approach computing. In simple terms, cloud computing refers to the delivery of computing services over the internet. It offers an easy and cost-effective way to access computing resources without the need for on-premises infrastructure.

One of the main types of cloud computing services is Infrastructure as a Service (IaaS). IaaS provides users with access to computing resources such as virtual machines, storage, and networking. With IaaS, users can rent these resources and use them to build their own computing infrastructure.

An analogy to understand IaaS is renting an empty apartment. When you rent an apartment, you have the space and resources, but you are responsible for everything else, including setting up furniture, utilities, and security. Similarly, with IaaS, you have access to the computing resources, but you are responsible for configuring and managing them.

Amazon Web Services (AWS) is a popular example of IaaS. AWS allows users to rent virtual machines, storage, and networking resources and use them to build their own computing infrastructure. This gives users flexibility and scalability in their infrastructure needs.

IaaS offers several benefits to businesses and individuals. Firstly, it eliminates the need for upfront investments in hardware and infrastructure. Instead, users can pay for the resources they need on a usage basis. This makes it a cost-effective option, especially for small businesses or startups with limited budgets.

Secondly, IaaS provides scalability. Users can scale their infrastructure up or down according to their needs. This flexibility allows businesses to adapt quickly to changing demands or seasonal peaks in their operations.
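The scaling decision described here can be sketched as a simple rule: divide the current load by what one instance can handle, round up, and keep the result within agreed limits. The function name, parameters, and default bounds below are hypothetical; real autoscalers use richer signals such as CPU utilization and cool-down periods.

```python
import math


def desired_instances(requests_per_second: float, capacity_per_instance: float,
                      min_instances: int = 1, max_instances: int = 20) -> int:
    """Number of instances needed for the load, clamped to [min, max]."""
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    needed = math.ceil(requests_per_second / capacity_per_instance)
    return max(min_instances, min(max_instances, needed))


if __name__ == "__main__":
    # Hypothetical load levels through a day with a seasonal peak.
    for load in (0, 450, 5000):
        print(load, "req/s ->", desired_instances(load, 100), "instances")
```

The lower bound keeps a service reachable when traffic drops to zero, and the upper bound caps spending during unexpected spikes.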

Additionally, IaaS offers reliability and redundancy. Service providers typically have multiple data centers, ensuring that even if one data center experiences an issue, there is redundancy in place to maintain service availability.

IaaS also allows for greater agility and faster time-to-market. Users can provision and deploy virtual machines and other resources quickly, reducing the time it takes to set up and launch new applications or services, and avoiding costly and time-consuming hardware upgrades or acquisitions.

By leveraging IaaS, organizations can focus on their core competencies and strategic objectives, rather than getting bogged down in running physical infrastructure. They benefit from the expertise of cloud service providers, who handle the operational aspects of the underlying hardware, such as maintenance, physical security, and updates, while the customer remains responsible for configuring and managing what runs on top of it.

IaaS also strengthens disaster recovery. Cloud service providers usually keep backup systems alongside their redundant data centers, helping data and applications remain available even in the event of hardware failures or natural disasters.

IaaS can also enhance security. Service providers invest heavily in implementing security measures to protect the infrastructure and customer data. They have expertise in managing security, backups, and disaster recovery.

Overall, IaaS empowers businesses to quickly and efficiently deploy IT resources, allowing them to stay competitive in today's fast-paced digital landscape. It offers cost savings, scalability, reliability, and the freedom to focus on innovation and value creation.

In summary, IaaS is a type of cloud computing service that provides users with access to computing resources like virtual machines, storage, and networking. It offers the flexibility, scalability, and cost-effectiveness needed for businesses and individuals to build their own computing infrastructure without the need for on-premises hardware and maintenance. [1][2]

Cloud Computing and Software as a Service (SaaS)

Cloud Computing and Software as a Service (SaaS) have revolutionized the way businesses manage their IT infrastructure and deliver software applications to end-users. Cloud services, including SaaS, provide numerous benefits such as efficiency, cost reduction, scalability, and easy accessibility.

Cloud Computing is a paradigm that enables users to access and utilize computing resources and services over the internet, without the need for on-premises infrastructure. It allows organizations to store, manage, and process data in remote data centers, known as the cloud. The cloud eliminates the need for organizations to invest in expensive hardware equipment and infrastructure, as they can leverage the resources provided by cloud service providers.

One of the most widely known and commonly used cloud computing models is Software as a Service (SaaS). SaaS delivers software applications over the internet on a subscription basis. This means that users can access the applications through their web browsers without the need for installation or maintenance on their local devices. SaaS applications are typically accessed through a web portal, providing users with the flexibility to use the software from anywhere with an internet connection.

SaaS offers several advantages for both large companies and end-users. Firstly, it simplifies the software deployment process, as users can start using the application immediately without the need for complex installations or configurations. Moreover, SaaS eliminates the need for companies to hire large IT teams to troubleshoot and maintain the software, as these responsibilities are handled by the cloud service providers.

Additionally, SaaS enables cost reduction for organizations, as they no longer need to invest in purchasing and maintaining expensive software licenses. Instead, they can pay a subscription fee based on the number of users or the usage of the software. This subscription-based pricing model allows companies to scale their software usage as per their requirements, resulting in cost savings and increased operational efficiency.

Another key benefit of SaaS is its scalability. Cloud service providers can easily scale their infrastructure up or down based on the demand from users. This means that companies can quickly adjust their software usage and resources without the need for lengthy procurement processes or hardware upgrades. Scalability also ensures that organizations have the necessary computing power and performance to support their growth and changing business needs.

Furthermore, SaaS offers excellent reliability and accessibility. Cloud service providers aim for high uptime and data redundancy, which reduces the risk of data loss or application downtime. Users can access their SaaS applications from any device with an internet connection, making it convenient for remote work or collaboration among teams.

In summary, Cloud Computing and Software as a Service (SaaS) have transformed the way businesses operate and deliver software applications. With cloud services, organizations can simplify their IT infrastructure, reduce costs, improve scalability, and enhance accessibility. SaaS, in particular, allows companies to access and use software applications without the need for complex installations or maintenance. It offers flexibility, cost savings, and reliable performance, making it a preferred choice for many businesses. By embracing Cloud Computing and SaaS, companies can enhance their productivity, efficiency, and overall success in the digital market. [1][2]

The Rise of Multi-Cloud Adoption

The rise of multi-cloud adoption is transforming the way enterprises manage their IT infrastructure. According to recent survey data, 36% of organizations worldwide currently use multi-cloud solutions. This number is projected to grow significantly, with adoption expected to reach 64% within the next one to three years. For large enterprises with 5,000 or more employees, the adoption rate is even higher, with 57% already leveraging multi-cloud environments.

These findings, derived from Nutanix's fourth annual study on global enterprise cloud adoption, highlight the growing trend of multi-cloud usage. This approach involves combining multiple clouds, be it public or private, to meet the diverse needs of businesses. It provides numerous benefits, such as enhanced scalability, improved performance, and increased flexibility.

While some may view a move towards multi-cloud as risky, the data strongly suggests that not adopting this approach may be even riskier in the long run. The cloud industry evolves rapidly, often outpacing the comfort levels of IT professionals. By leveraging multi-cloud solutions, enterprises can stay ahead of the curve and harness the full potential of cloud technology.

What sets multi-cloud apart from traditional cloud strategies is its ability to leverage different cloud providers and platforms simultaneously. This approach offers organizations greater freedom and choice in deploying specific workloads and applications. It allows them to optimize costs by using different providers based on specific requirements. For example, some workloads may perform better on one cloud platform while others may be more cost-effective on another.
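A placement decision of this kind can be sketched in a few lines: filter the providers that meet a workload's requirement, then pick the cheapest of those. The `Offer` type, the provider names, and all numbers below are hypothetical, and real placement would weigh many more factors such as data residency and egress fees.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    provider: str       # hypothetical provider label
    hourly_cost: float  # cost of running the workload for one hour
    latency_ms: float   # expected latency to the workload's users


def place_workload(offers: list[Offer], max_latency_ms: float) -> Offer:
    """Return the cheapest offer that satisfies the latency requirement."""
    eligible = [o for o in offers if o.latency_ms <= max_latency_ms]
    if not eligible:
        raise ValueError("no provider meets the latency requirement")
    return min(eligible, key=lambda o: o.hourly_cost)


if __name__ == "__main__":
    offers = [
        Offer("cloud-a", 0.12, 40),
        Offer("cloud-b", 0.09, 120),
        Offer("cloud-c", 0.10, 60),
    ]
    print(place_workload(offers, max_latency_ms=80).provider)
```

Running the same function with a looser latency limit can select a different provider, which is the essence of matching each workload to the platform that suits it.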

Moreover, multi-cloud adoption enables businesses to mitigate risks and enhance security. By distributing workloads across multiple clouds, they reduce the likelihood of a single point of failure. If one cloud provider experiences an outage or security breach, the impact on the overall infrastructure is minimized. Additionally, organizations can enforce consistent security and data governance policies across all their cloud environments, ensuring comprehensive protection.

However, to fully realize the potential of multi-cloud, organizations must adopt tools and technologies that streamline operations and management in a unified manner. This means implementing solutions that automate cross-cloud operations and provide a centralized management interface. Streamlining processes and eliminating complexities will be a key focus for organizations in the coming year, according to the study.

Furthermore, it is crucial for organizations to take a strategic approach when designing their multi-cloud architecture. A one-size-fits-all approach no longer suffices in today's dynamic business landscape. Each workload and application has unique requirements and may perform optimally on different cloud environments. By adopting a strategic mindset, enterprises can tailor their IT infrastructure to align with specific business goals and objectives.

The rise of multi-cloud adoption signifies a paradigm shift in how enterprises approach cloud computing. It is no longer a question of whether to adopt the cloud but how to leverage multiple clouds effectively. This trend presents tremendous opportunities for businesses, enabling them to harness the power of diverse cloud platforms while mitigating risks and optimizing costs.

In conclusion, the data clearly shows that multi-cloud adoption is on the rise, with organizations realizing the importance of leveraging multiple cloud providers and platforms. The advantages offered by multi-cloud, such as scalability, performance, flexibility, and enhanced security, are driving its widespread adoption. To fully leverage multi-cloud environments, organizations must focus on simplifying operations, taking a strategic approach, and utilizing automation tools. As the cloud industry continues to evolve rapidly, multi-cloud adoption is poised to become the norm rather than the exception. [1][2]

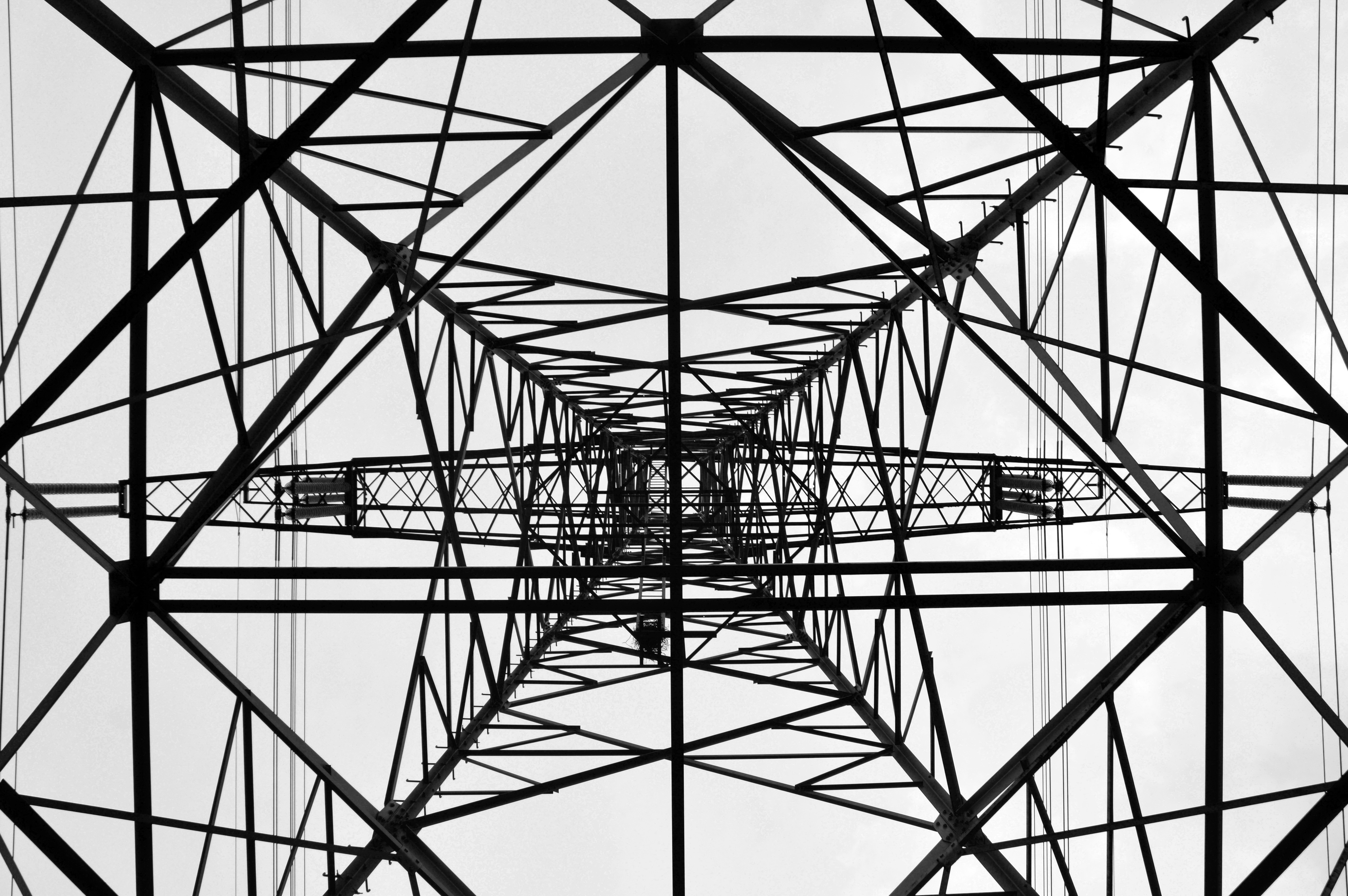

Cloud Computing and Network Infrastructure

Cloud computing has emerged as a transformative technology that has revolutionized the IT landscape. It has redefined how businesses and individuals utilize and manage their computing resources. Cloud technology has had a huge impact on the state of network-as-a-service business models over the last few years.

Cloud computing, as the name suggests, involves the delivery of computing services over the internet. Users can access and use different computing resources such as storage, processing power, and software programs using remote servers hosted by third-party providers, rather than depending on local servers or personal computers. These resources are provisioned on demand and accessed over a network connection, so even geographically distant businesses can use cloud services and scale their consumption up or down based on their needs.

One of the key ways cloud computing has revolutionized the IT industry is through accessibility and teamwork, removing many obstacles to access and collaboration. With the flexibility offered by cloud computing, users can access their applications and data from anywhere with an internet connection using a wide range of devices. This enhances productivity and enables remote work, as employees can collaborate seamlessly and access their work resources from various locations.

Cost efficiency is another significant advantage of cloud computing. It eliminates the need for large upfront hardware and software infrastructure investments. Instead, users pay for the resources they consume on a subscription or pay-as-you-go basis. This allows businesses to reduce costs, as they only pay for what they use, and easily scale up or down their resource consumption based on demand.

Cloud computing has also revolutionized IT infrastructure by enabling organizations to scale up operations with unprecedented flexibility. By allowing on-demand access to a shared pool of configurable computing resources, cloud computing has made it possible for businesses to rapidly adjust their computing power and storage capacity as needed. This agility is particularly valuable in today's fast-paced and rapidly evolving business environment.

Furthermore, cloud computing has opened up new opportunities for innovation in the IT industry. With cloud technology, businesses can leverage advanced technologies such as artificial intelligence and big data analytics without the need for significant investments in infrastructure. Cloud providers offer a wide range of services and tools that enable businesses to harness the power of these technologies and gain valuable insights from their data, leading to enhanced competitiveness and growth.

In addition to its impact on businesses, cloud computing has also transformed how individuals consume and manage their personal computing resources. Cloud storage services, for example, allow individuals to store their files and data securely in the cloud, eliminating the need for physical storage devices. This provides convenience and peace of mind, as users can access their files from any device with an internet connection.

In conclusion, cloud computing has revolutionized the IT landscape by providing a flexible, cost-efficient, and scalable approach to computing resources. It has transformed how businesses and individuals access, manage, and utilize their computing resources, enabling greater accessibility, productivity, and innovation. As technology continues to develop, cloud computing is expected to remain a cornerstone of the IT sector, driving further advancements and opportunities for businesses and individuals alike. [1][2]

Cloud Computing and Blockchain Technology

Cloud Computing and Blockchain Technology are two transformative technologies that have significantly impacted various industries. Cloud Computing refers to the delivery of computing services and resources over the internet, allowing users to access and manipulate data and applications remotely. It offers flexibility, scalability, and cost-effectiveness, enabling businesses to streamline operations and optimize resource utilization. On the other hand, Blockchain Technology offers a decentralized and transparent way of recording and verifying transactions. It provides security, immutability, and traceability, making it ideal for industries like finance, supply chain, and healthcare.
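The immutability mentioned above comes largely from hash-linking: each record stores the hash of the one before it, so changing an old record breaks every later link. The sketch below shows only that linking step, using hypothetical function names and a plain Python list; it leaves out the decentralization and consensus that a real blockchain network adds on top.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, data: str, prev_hash: str) -> str:
    """SHA-256 over the block's contents, serialized deterministically."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain: list[dict], data: str) -> None:
    """Add a block that points at the hash of the current last block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    chain.append({"index": index, "data": data, "prev_hash": prev_hash,
                  "hash": block_hash(index, data, prev_hash)})


def is_valid(chain: list[dict]) -> bool:
    """Recompute every hash and check each link to the previous block."""
    prev_hash = GENESIS_HASH
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev_hash"] != prev_hash:
            return False
        if block["hash"] != block_hash(i, block["data"], block["prev_hash"]):
            return False
        prev_hash = block["hash"]
    return True


if __name__ == "__main__":
    ledger: list[dict] = []
    append_block(ledger, "Alice pays Bob 5")
    append_block(ledger, "Bob pays Carol 2")
    print("valid before tampering:", is_valid(ledger))
    ledger[0]["data"] = "Alice pays Bob 500"  # tamper with history
    print("valid after tampering:", is_valid(ledger))
```

Because every block depends on the one before it, an edit to an early transaction is detectable by anyone who re-verifies the chain, which is where the traceability benefit comes from.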

In recent years, the integration of Cloud Computing and Blockchain Technology has gained significant attention. This integration leverages the strengths of both technologies, making it possible to build more secure and efficient solutions. Cloud-based blockchain platforms facilitate the development and deployment of decentralized applications (dApps), enabling organizations to harness the power of blockchain without the need for extensive infrastructure. Moreover, by utilizing cloud storage for blockchain data, the scalability and accessibility of blockchain networks can be improved, making them more widely usable.

The combination of Cloud Computing and Blockchain Technology has enormous potential across various industries. One of the key benefits is enhanced transparency and security. Businesses can leverage blockchain's distributed ledger to improve data integrity and mitigate cyber threats. By leveraging cloud-based blockchain solutions, organizations can ensure the availability and reliability of their systems while benefiting from the decentralized nature of blockchain technology.

Furthermore, the integration of these technologies enables efficient and cost-effective processes. The cloud provides the necessary computing power to validate and process blockchain transactions at a global scale, reducing the need for organizations to buy, run, and power expensive hardware of their own. This synergy allows businesses to reduce operational costs while experiencing the benefits of blockchain technology.

In conclusion, the combination of Cloud Computing and Blockchain Technology presents a compelling opportunity for businesses across industries. The seamless integration of these technologies offers enhanced security, transparency, scalability, and cost-effectiveness. As a result, organizations can optimize their operations, improve efficiency, and unlock new levels of innovation. Embracing these technologies can lead to significant advantages in an increasingly digital and globalized world.

The integration of Cloud Computing and Blockchain Technology has the potential to revolutionize various industries. As both technologies continue to evolve and mature, the opportunities for innovative solutions and improved processes will only increase. The ability to harness the benefits of cloud-based blockchain platforms can provide businesses with a competitive edge, enabling them to adapt to a rapidly changing market and stay ahead of the curve. The transparency, security, and scalability offered by this integration are crucial in a world where data and digital transactions are becoming more prevalent. Overall, I believe that the combination of Cloud Computing and Blockchain Technology is a game-changer that will shape the future of technology, business, and society.

Understanding Cloud Computing Service Models

Cloud computing is one of the most talked-about topics in the modern era of computing. This technology has become increasingly popular in recent years and is being adopted by businesses of all sizes to enhance their operations. It offers various benefits that include cost savings, flexibility, scalability, and ease of use. On top of this, there are different service models within cloud computing that cater to specific needs and requirements. These service models are Software-as-a-Service (SaaS), Platform-as-a-Service (PaaS), and Infrastructure-as-a-Service (IaaS). Understanding these service models is crucial for businesses to make informed decisions about their cloud computing strategy. In this blog article, we look at what each service model offers and which kinds of businesses it suits.

What is Software-as-a-Service (SaaS)?

SaaS is a software delivery model that allows users to access applications through the internet on a subscription basis. With this model, businesses of any size can eliminate the need for on-premise installations and instead access software through a web browser. SaaS offers advantages that include lower upfront costs, seamless upgrades, and accessibility from any device. Some top examples of SaaS include Gmail, Microsoft Outlook, and Salesforce.

What is Platform-as-a-Service (PaaS)?

Like SaaS, PaaS is delivered online, but instead of finished applications it provides a platform for developers to build, test, and deploy applications without having to own or manage the underlying infrastructure. This service model offers tools and frameworks that simplify the development process, allowing developers to focus on building applications instead of dealing with infrastructure issues. Some good examples of PaaS offerings include Google App Engine and Heroku.

What is Infrastructure-as-a-Service (IaaS)?

The last cloud service model is Infrastructure-as-a-Service (IaaS). With this model, businesses can access virtualized computing resources like servers, storage, and networks on a pay-per-use basis. IaaS allows businesses to outsource their infrastructure needs to a service provider, which reduces the need for on-premise data centers and hardware that are often expensive and out of reach, especially for small businesses. IaaS offers scalability, flexibility, and cost-effectiveness, which makes it a popular choice for startups and small businesses. Leading IaaS providers include Amazon Web Services (AWS), Microsoft Azure, and Google Compute Engine.

Each of the above cloud computing service models offers unique advantages and considerations. SaaS is ideal for businesses that require ready-to-use applications without installation and maintenance. PaaS is a great choice for developers who want to concentrate on application development rather than infrastructure management. IaaS, on the other hand, is suitable for businesses that want to outsource their infrastructure needs while keeping greater control over their computing environment. With IaaS, businesses can pay for the infrastructure they need for their services or operations without breaking the bank to build data centers.
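One way to compare the three models is by who manages which layer of the stack. The sketch below encodes a commonly used, simplified breakdown; the layer names and the exact split are illustrative assumptions, and real offerings draw the lines somewhat differently.

```python
# Layers from the top of the stack (closest to the user) to the bottom.
LAYERS = [
    "applications", "data", "runtime", "middleware", "operating system",
    "virtualization", "servers", "storage", "networking",
]

# Simplified view of what the provider manages under each model.
PROVIDER_MANAGED = {
    "IaaS": {"virtualization", "servers", "storage", "networking"},
    "PaaS": {"runtime", "middleware", "operating system",
             "virtualization", "servers", "storage", "networking"},
    "SaaS": set(LAYERS),
}


def customer_managed(model: str) -> list[str]:
    """Layers left to the customer under the given service model."""
    provider = PROVIDER_MANAGED[model]
    return [layer for layer in LAYERS if layer not in provider]


if __name__ == "__main__":
    for model in ("IaaS", "PaaS", "SaaS"):
        print(model, "-> customer manages:", customer_managed(model) or "nothing in the stack")
```

Read from IaaS to SaaS, the customer's list shrinks, which mirrors the trade-off described above: more control at the infrastructure end, less maintenance at the software end.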

In conclusion, understanding the different cloud computing service models is essential for businesses looking to leverage the power of the cloud. By aligning their needs with the appropriate service model, businesses can maximize the benefits of cloud computing, such as cost savings, flexibility, and scalability. Whether it is SaaS, PaaS, or IaaS, cloud computing has become central to the future of computing, and businesses should embrace this technology to stay competitive in today's digital landscape. Regardless of the size of the business you are running, consider adopting whichever of the cloud models above fits your needs for reduced cost and efficient service delivery. The flexibility of the cloud also makes it an excellent fit for small businesses that expect to grow over time and expand their infrastructure.

Cloud Computing: The Future of Business Strategy in 2024

In a world where technology is constantly evolving, businesses must adapt to stay ahead. According to PRWeb, cloud computing is set to revolutionize the way companies operate by 2024. This innovative approach to data storage and management offers both challenges and opportunities for businesses of all sizes.

While the idea of cloud computing may seem exciting, it's important to question how it will actually impact businesses. Will it improve efficiency and productivity, or will it create new challenges and vulnerabilities? Only time will tell, but companies should carefully consider the potential risks and benefits before fully embracing this technology.

5 Tips for Securing Your Cloud Configuration - You Won't Believe #3!

In today's digital world, businesses of all sizes are turning to cloud computing for its efficiency and cost-effectiveness. But with this convenience comes the risk of cyberattacks and data breaches. That's why it's important to follow these 5 best practices for securing your cloud configuration, as shared by Security Intelligence News. From implementing a zero trust policy to staying updated on the latest threats, these tips will help keep your cloud environment safe and secure.

While these tips may seem helpful, it's important to remember that no system is completely foolproof. Even the best security measures can still be vulnerable to hackers and cybercriminals. It's always important to stay vigilant and be cautious when it comes to protecting sensitive information, especially in the ever-changing landscape of technology.

Is This Company Poised to Dominate the Booming Cloud Market?

HashiCorp has become a major player in the rapidly growing cloud computing industry, thanks to its multi-cloud and open-source automation tools. According to Seeking Alpha, the company's cloud-agnostic approach sets it apart from competitors and allows for seamless integration with multiple cloud platforms, avoiding vendor lock-in. With steady revenue growth and improved profitability, HashiCorp has proven its ability to thrive in this competitive market.

However, as with any company, there are always skeptics. While HashiCorp may have a strong foothold in the cloud market now, it's important to consider the ever-changing landscape of technology and the potential for new competitors to emerge. Only time will tell if HashiCorp can maintain its position as a leader in the cloud computing industry.

Discover the Future of Cloud Computing Services in 2024-2031

Get ready for the next big thing in technology! According to a recent report by MENAFN.com, the Cloud Computing Services market is expected to experience significant growth between 2024 and 2031. This market is constantly evolving and adapting to meet the needs of the industry, making it essential for companies to invest in research and development to stay ahead of the game. Keep reading to learn more about the latest trends and innovations in the Cloud Computing Services market.

As exciting as this news may be, it's important to approach it with a critical eye. While the report may provide valuable insights, it's always wise to consider multiple sources and do your own research before making any decisions. After all, the future is never certain and it's important to stay informed and cautious when it comes to new developments in the market.

Optimizing Cloud Resources with a Managed Service Provider

Cloud computing offers many benefits to organizations of all sizes. The flexibility and scalability of cloud solutions make them an excellent choice for companies with fluctuating or seasonal business requirements. Cloud service providers (CSPs) make cutting-edge technology available to customers without the capital costs associated with purchasing and maintaining hardware and software.

The wide variety of offerings available in the portfolios of CSPs can be difficult to navigate efficiently. Many companies need assistance in using cloud resources effectively to address business objectives. Fortunately, they can get the help they need by partnering with a managed service provider (MSP).

Benefits of Engaging a Managed Service Provider

A managed service provider is a third party that assumes responsibility for managing part or all of an organization’s IT infrastructure, applications, and systems. MSPs are often engaged by small and medium-sized businesses that do not have the time or personnel to manage their IT environment.

Following are some of the most beneficial aspects of working with an experienced cloud computing MSP.

- Selecting the appropriate cloud solutions - An MSP can help a company identify the cloud services and solutions that best fit the needs of the business. The MSP may suggest specific SaaS solutions to refresh the company’s technical capabilities. An MSP that has diverse experience with multiple cloud service providers (CSPs) will be able to guide a company toward the most effective solutions.

- Addressing gaps in technical skills - Companies can leverage the technical skills and resources of an MSP to fill gaps that may be affecting their ability to get the maximum value from a cloud computing investment. The skills available from an MSP enable companies to implement advanced technological solutions for which they have insufficient technical personnel.

- Controlling cloud computing costs - A major selling point promoted by CSPs is the savings available from cloud computing solutions. While substantial savings are indeed possible, inexperience or mismanagement can result in companies paying more than necessary for cloud services. Additional expenses for unnecessary capacity or services can quickly negate any of the financial benefits of the cloud. An experienced MSP will be able to help find the most cost-effective methods of using the cloud and help maximize an organization’s IT budget.

- Facilitating cloud migrations - Organizations may understand the advantages of migrating on-premises systems to the cloud but be apprehensive about actually making the move. Migration can be a challenging and intimidating experience. An experienced MSP can help guide a company through potential difficulties and be instrumental in supporting a successful migration.

- Managing cloud resources - A company’s cloud resources need to be efficiently managed to ensure performance and availability and to minimize extraneous costs. Scalability comes at a price, and it is easy for an organization to end up using more capacity than it needs for the business. An MSP has the experience to manage the environment so that all business objectives are met without incurring unnecessary costs.
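The cost-control and resource-management points above can be illustrated with a minimal sketch. It assumes a hypothetical inventory of instances with made-up prices and utilization figures; the names `Instance`, `flag_underused`, and `monthly_waste`, the 20% threshold, and the 730-hour month are illustrative assumptions, not part of any provider's API.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    name: str               # hypothetical resource name
    hourly_cost: float      # assumed price in dollars per hour
    avg_utilization: float  # average utilization, from 0.0 to 1.0


def flag_underused(instances, threshold=0.2):
    """Return the instances whose average utilization is below the threshold."""
    return [i for i in instances if i.avg_utilization < threshold]


def monthly_waste(instances, hours_per_month=730):
    """Estimate monthly spend on the unused share of each instance."""
    return sum(
        i.hourly_cost * hours_per_month * (1 - i.avg_utilization)
        for i in instances
    )


# Illustrative data only; real figures would come from billing and monitoring.
fleet = [
    Instance("web-1", 0.10, 0.65),
    Instance("batch-1", 0.40, 0.05),
    Instance("db-1", 0.25, 0.50),
]

underused = flag_underused(fleet)
print([i.name for i in underused])
print(round(monthly_waste(underused), 2))
```

A review like this is only a starting point: a flagged instance may still be needed for peak loads, so the output is a list of candidates to investigate rather than resources to remove automatically.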

Working with an MSP enables a company to focus on running its business without worrying about its cloud computing environment. Engaging an experienced and reliable MSP is an excellent way for smaller companies to get the most value from their IT investment and compete more effectively with larger rivals.

How Pen Testing as a Service Improves Your Security

Penetration testing, commonly known as pen testing, is a form of security testing in which the testers adopt the tactics of cybercriminals to attack a specific application, system, or network. The purpose of a pen test is to defeat existing security measures and identify vulnerabilities. Testers often search for combinations of vulnerabilities that, when used together, pose a greater threat than each individual vulnerability.

Large organizations with dedicated security teams typically have pen testing groups that methodically address their infrastructure components and applications. Smaller companies don’t always have that luxury. A lack of testing can put their environment at risk from sophisticated cyberthreats.

Penetration testing as a service (PTaaS) enables organizations of any size to reap the benefits of targeted vulnerability assessments. While it is similar to traditional pen testing, PTaaS exhibits some differences that may make it even more valuable for improving cybersecurity.

What is Pen Testing as a Service?

PTaaS is a cloud service in which IT professionals are provided with the resources necessary to perform continuous penetration tests and act upon their results to reduce security vulnerabilities. The objective of PTaaS is for companies to develop vulnerability management programs to identify, prioritize, and remediate cyberthreats before they can impact their environment.
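As a rough illustration of the "identify, prioritize, and remediate" cycle, the sketch below orders open findings so that internet-facing, high-severity issues are handled first. The finding records, field names, and ranking rule are hypothetical assumptions for illustration and do not reflect any particular PTaaS vendor's data model.

```python
def remediation_queue(findings):
    """Order unresolved findings: internet-facing first, then by severity."""
    open_findings = [f for f in findings if not f["fixed"]]
    return sorted(
        open_findings,
        key=lambda f: (f["internet_facing"], f["severity"]),
        reverse=True,
    )


# Hypothetical findings; severity is an assumed 0-10 score.
findings = [
    {"id": "F-1", "severity": 7.5, "internet_facing": True, "fixed": False},
    {"id": "F-2", "severity": 9.1, "internet_facing": False, "fixed": False},
    {"id": "F-3", "severity": 5.0, "internet_facing": True, "fixed": True},
    {"id": "F-4", "severity": 9.8, "internet_facing": True, "fixed": False},
]

queue = [f["id"] for f in remediation_queue(findings)]
print(queue)
```

Ranking exposure ahead of raw severity is one possible policy among many; an organization might instead weight asset value or regulatory impact.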

PTaaS provides organizations with a flexible and agile method of performing on-demand pen testing. A PTaaS offering enables customers to leverage the skill of the provider’s pen testers to discover security vulnerabilities that pose risks to the infrastructure and data resources.

Customers can typically follow the progress of tests and view their results through a centralized dashboard. For comparison, data can be displayed before, during, and after a test. Vendors usually supply resources to assist in identifying vulnerabilities and determining effective remediations. These often include a knowledge base to help in-house teams remediate identified vulnerabilities and may also include assistance from the individuals who performed the tests.

Benefits of Pen Testing as a Service

Multiple benefits are possible when using a PTaaS solution.

- Accelerated testing results and remediation - Traditional pen testing methods provide vulnerability assessments only at the conclusion of the testing period. A PTaaS solution provides real-time access to testing data so vulnerabilities can be addressed promptly. Data related to a vulnerability can be monitored over time to evaluate remediation results.

- Flexible purchasing options - Vendors offer manual, automated, and hybrid PTaaS solutions that can be purchased through subscriptions or as on-demand services.

- Comprehensive reporting - The reporting capabilities of PTaaS solutions consolidate findings from multiple sources and can be tailored to meet organizational needs, such as demonstrating compliance with regulations like PCI-DSS.

Challenges of Employing a PTaaS Solution

Companies looking to use a PTaaS solution need to be aware of a few of its limitations and challenges.

- It may not be suitable for complex environments that require extensive expertise regarding specific domain technology.

- The solution may offer limited customization options that do not align with the testing requirements of specific systems.

- Additional testing cycles can surface new vulnerabilities before previously found issues have been successfully addressed, putting more strain on security teams.

Multiple vendors such as NetSPI and BreachLock offer PTaaS solutions that may be right for your business. Companies should take a close look at what PTaaS has to offer. PTaaS is another tool to be deployed in the never-ending attempt to maintain a secure IT environment.

Why Cloud Service Providers May Put Servers in Outer Space

The major cloud service providers (CSPs) are continuously looking for innovative services and products to add to their portfolio of offerings. They are also constantly on the lookout for ways to trim their budgets, improve performance, and optimize resource utilization.

One of the directions they are looking is straight up, with a variety of offerings that address the growth of space-based commercial enterprises and satellite technology. Let’s look at some of the ways two CSPs are planning to leverage the opportunities of the final frontier.

Azure Space combines the possibilities of space with the power of cloud computing. The platform includes several distinct products that employ space infrastructure to extend Azure capabilities and help space-based companies process satellite data efficiently.

Following are two of the offerings from Azure Space.

Azure Orbital Ground Station provides low-latency connectivity to extend communication coverage between a company’s satellites and the Azure cloud. Its features let customers:

- Operate faster by employing a global network of antennas that scale to address satellite fleet growth;

- Streamline satellite management using Azure cloud services and ground stations;

- Make use of secure and resilient ground stations for satellite communication;

- Control costs by only paying for bandwidth that is consumed on-demand.

The second offering, Azure Orbital Analytics, enables customers to downlink space-borne data from multiple sources and store it in Azure Data Platform components. The raw data can then be converted into analysis-ready data using its processing pipeline. Integrations with Microsoft tools and partner AI models allow customers to derive insights from satellite imagery and use them productively with Microsoft Teams or open source tools. This platform serves as the path between Microsoft customers and satellite operators.

Amazon is working on multiple space-based solutions for its customers as well as experimenting with new ways to provide its services via satellites. The following are two examples of the innovative use of space technology by AWS.

AWS Ground Station provides real-time processing of data using a global network of antennas that enable communication with a satellite to be established multiple times per orbit. High-resolution imagery can be refreshed more regularly for enhanced monitoring and change detection. The features of this offering include:

- Ground station as a service which eliminates purchase and maintenance expenses;

- Premium data and physical security;

- Fast downloads and immediate data processing;

- Self-service scheduling with customers only paying for the antenna time they use.

In November 2022, AWS announced that it had successfully run a suite of AWS software on an orbiting satellite. This experiment, described as the first of its kind, was conducted in low Earth orbit over the previous 10 months. The company is testing a faster and more efficient way for customers to capture and analyze space data directly on satellites using the cloud.

AWS edge capabilities on an orbiting satellite allow customers to analyze raw data in orbit and only downlink the most important images for later analysis. Communication with satellites will be more efficient with operators using familiar AWS tools.
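The idea of analyzing data in orbit and downlinking only the most important images can be sketched as a simple selection under a bandwidth budget. This is a minimal illustration, not AWS software: the `score` values stand in for the output of some on-board analysis, and the function name, field names, and budget are all hypothetical.

```python
def select_for_downlink(images, budget_mb):
    """Greedy pick: take the highest-scoring images that fit the budget."""
    chosen, used = [], 0.0
    for img in sorted(images, key=lambda i: i["score"], reverse=True):
        if used + img["size_mb"] <= budget_mb:
            chosen.append(img["id"])
            used += img["size_mb"]
    return chosen


# Hypothetical captures; score is an assumed importance rating from 0 to 1.
images = [
    {"id": "img-a", "score": 0.91, "size_mb": 120},
    {"id": "img-b", "score": 0.15, "size_mb": 110},
    {"id": "img-c", "score": 0.78, "size_mb": 200},
    {"id": "img-d", "score": 0.40, "size_mb": 90},
]

selected = select_for_downlink(images, budget_mb=350)
print(selected)
```

The greedy rule is chosen for readability; it does not guarantee the best possible use of the budget, and a real system would also need to account for contact windows and link quality.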

These are just a few examples of cloud computing expanding into space. The large CSPs are driving innovation and opening the door for space data to be used more effectively to address important issues that affect business and society.

What Are Deep Sea Data Centers and Do They Matter?

The cloud in cloud computing is an abstract concept that does not mean that servers and storage devices are floating around, hidden in fluffy congregations of water vapor. But while the current computing environment may not incorporate those cumulus clouds floating across the sky, you might be surprised to learn that there is a lot of interest in locating servers deep in the ocean.

The terrestrial data centers that make up the cloud require an incredible amount of electricity for power and millions of gallons of water for cooling the computers and peripheral devices they house. These considerations influence where large cloud data centers are built, as they need access to sufficient energy and water resources.

One might wonder why anyone would want to put computer servers in the ocean. But when you think about it, it makes a lot of sense. The ocean provides an abundant source of renewable energy and, by its very nature, plenty of water for cooling hot equipment. Building on the ocean floor does not require rerouting water resources that might be needed for agriculture or other human endeavors.

Microsoft has explored the concept of submerged servers since 2015, when it established Project Natick to research the feasibility of housing data centers on the ocean floor and powering them with offshore renewable energy. The tests were conducted off the coast of Orkney, Scotland at the European Marine Energy Centre.

The company followed up an initial experiment with a second phase, which deployed an underwater data center four times larger than phase one with 36 times its computing power. The pressure vessel containing the servers was a little over 12 meters long and 3 meters in diameter, about the size of a 40-foot shipping container. Its electrical power consumption was 240 kW, all of which was locally produced by renewable wind, solar, tidal, and wave sources.

Natick Phase II consisted of 12 racks containing 864 standard Microsoft data center servers and 27.6 petabytes of disk space. It had the computing power of several thousand consumer PCs combined and storage space for around five million movies.
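A quick back-of-the-envelope check puts the figures quoted above in perspective. The sketch uses only the numbers given in the text and makes two simplifying assumptions: decimal units (1 petabyte = 1,000,000 gigabytes) and, for the per-server power figure, that the entire 240 kW goes to the servers, which makes that value an upper bound.

```python
racks = 12
servers = 864
storage_pb = 27.6
power_kw = 240
movies = 5_000_000

# Servers housed in each rack.
servers_per_rack = servers // racks

# Upper bound on power per server, assuming all power reaches the servers.
watts_per_server = power_kw * 1000 / servers

# Implied size of one movie, using decimal units (1 PB = 1,000,000 GB).
gb_per_movie = storage_pb * 1_000_000 / movies

print(servers_per_rack)
print(round(watts_per_server, 1))
print(round(gb_per_movie, 2))
```

The results work out to 72 servers per rack, just under 280 watts per server, and roughly 5.5 GB per movie, which is consistent with the "five million movies" comparison.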

The second phase of Project Natick concluded on July 9th, 2020. What Microsoft learned from its testing has potentially paradigm-shifting ramifications for the construction of future data centers.

Advantages of Undersea Data Centers

Following are some of the advantages that Microsoft’s experiments have demonstrated.

- Increased reliability - The use of nitrogen rather than oxygen for the vessel’s atmosphere, combined with the absence of people potentially bumping into equipment, led to a failure rate one-eighth that of a land-based control group.

- Consumer benefits - Data centers modeled after Natick can be provisioned in 90 days. They can reduce latency by being located closer to the consumers using them. A large percentage of the world’s population lives near a coastline, which makes coastal waters an attractive location for deep sea data centers.

- Societal benefits - Renewable energy and intelligent manufacturing using recycled and recyclable materials provide a zero-emission and sustainable path for data center construction.

Microsoft plans to use the knowledge gained from Project Natick to improve the sustainability of its more traditional data centers. While no commercial product has yet been announced, the future of deep sea data centers looks promising for the benefits they can provide consumers and society.
